An open source hardware alternative to motion capture

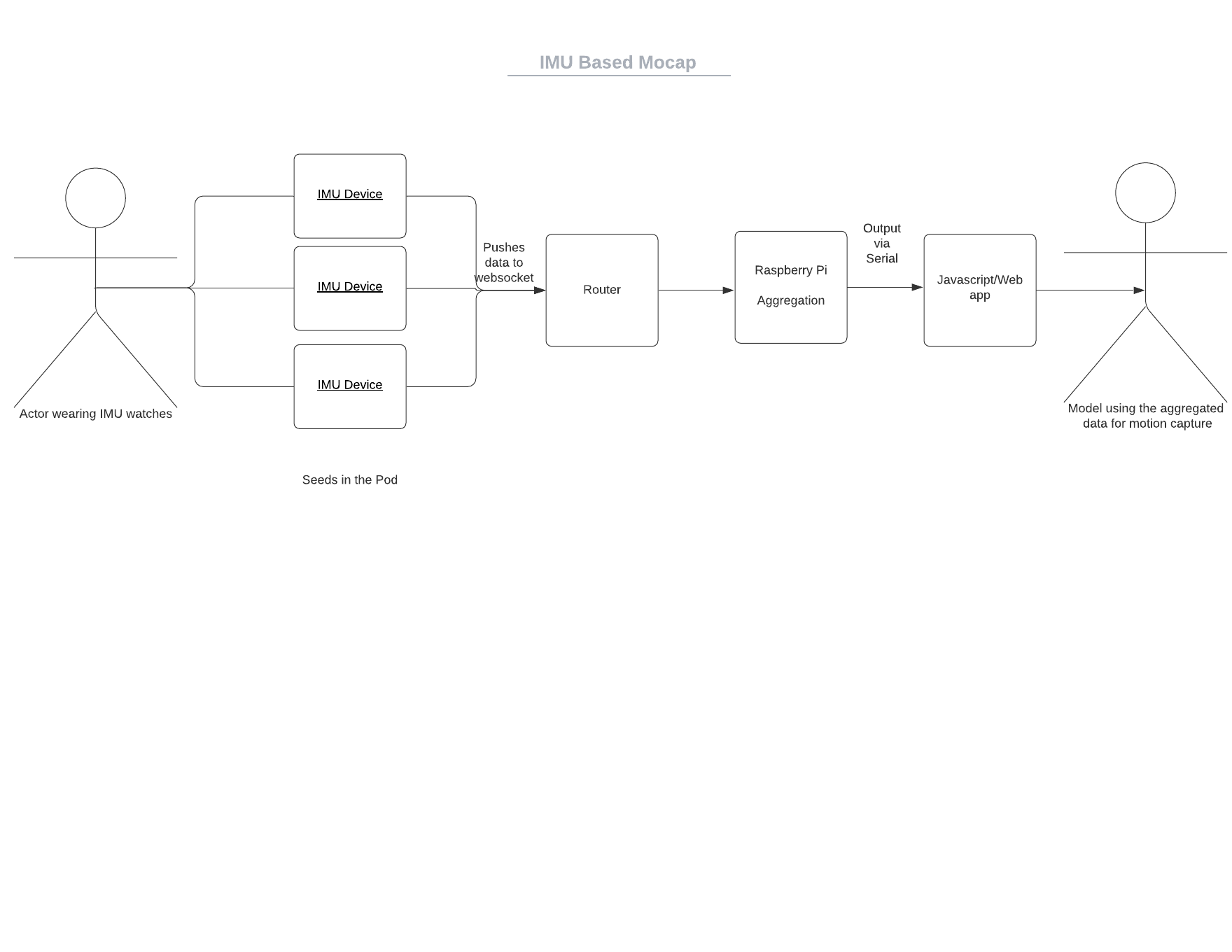

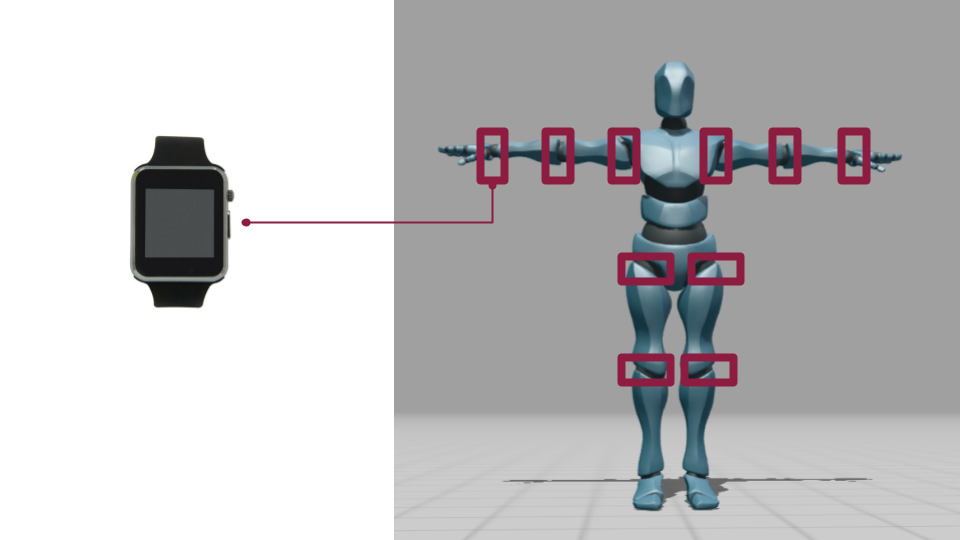

The overall goal of Mesquite Mocap is to build an open-source, low-cost motion capture process using IMU devices and JavaScript. A traditional mocap setup can cost upwards of $100k, while each IMU device starts at $35. By building a system of many TTGO T-Watches, we can rig 3D models using motion data. The project is open source and available to community developers and artists: anyone can access the code, contribute to it, or adapt it to their needs. All Mesquite Mocap code is released under the GNU General Public License.

There are multiple applications for this system, including understanding movement, extending movement, and mapping movement. How the system is used depends on each user's project, so the code base is meant to be flexible enough to incorporate into a wide variety of applications.

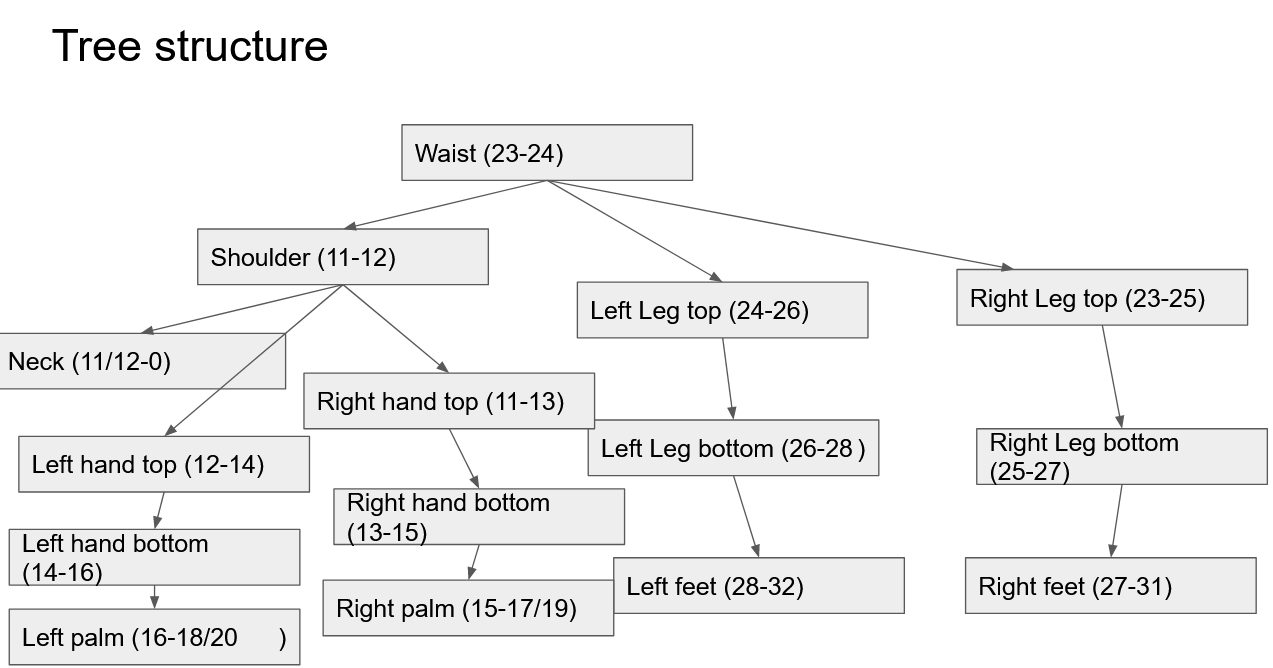

Each Mesquite Mocap system consists of many seeds (the IMU watches) that together form a pod (an aggregate of watches). A system with multiple mocap suits becomes a tree. Going forward, we use these terms:

A Seed

A seed is interchangeable with a TTGO T-Watch.

A Pod

A pod is interchangeable with an individual's full mocap suit.

A Tree

A tree is interchangeable with a system of multiple mocap suits.
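One way to picture the seed/pod/tree hierarchy is as nested collections. The sketch below is a hypothetical data model in JavaScript (the project's stated language). The class names follow the terms above, but the fields `seedId`, `podId`, and `bodyPart` are illustrative assumptions, not Mesquite Mocap's actual schema.

```javascript
// Hypothetical data model for the seed/pod/tree hierarchy.
// Field names are illustrative, not Mesquite Mocap's actual schema.

class Seed {
  constructor(seedId, podId, bodyPart) {
    this.seedId = seedId;     // one TTGO T-Watch with its BNO08x
    this.podId = podId;       // the suit (pod) this watch belongs to
    this.bodyPart = bodyPart; // where the watch is worn, e.g. "leftForearm"
  }
}

class Pod {
  constructor(podId) {
    this.podId = podId;
    this.seeds = new Map(); // seedId -> Seed
  }

  addSeed(seed) {
    this.seeds.set(seed.seedId, seed);
  }
}

class Tree {
  constructor() {
    this.pods = new Map(); // podId -> Pod
  }

  // File a seed under its pod, creating the pod the first time it is seen.
  addSeed(seed) {
    if (!this.pods.has(seed.podId)) {
      this.pods.set(seed.podId, new Pod(seed.podId));
    }
    this.pods.get(seed.podId).addSeed(seed);
  }
}

// Usage: two suits (pods) sharing one tree.
const tree = new Tree();
tree.addSeed(new Seed("watch-01", "suit-A", "leftForearm"));
tree.addSeed(new Seed("watch-02", "suit-A", "rightForearm"));
tree.addSeed(new Seed("watch-03", "suit-B", "head"));
console.log(tree.pods.size);                     // 2
console.log(tree.pods.get("suit-A").seeds.size); // 2
```

Keying pods and seeds by ID in a `Map` lets incoming readings be routed to the right suit with a single lookup.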

This is the high-level overview of the technical implementation of this project.

To get started on this project, you need to have the following hardware.

You can purchase the TTGO T-Watch from AliExpress or Amazon.

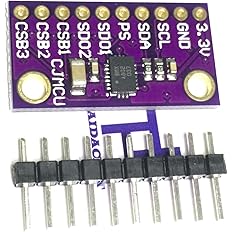

You will need a BNO08x IMU chip soldered onto the watch hardware.

You can purchase this chip from AliExpress or Amazon.
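The BNO08x can report orientation as a unit quaternion through its rotation-vector reports. A common way to drive a rig from such readings, assumed here rather than taken from the Mesquite Mocap code, is to capture a reference quaternion while the wearer holds a calibration pose and then apply only the rotation since that pose: q_rel = conj(q_ref) ⊗ q_current. The helper names below are hypothetical.

```javascript
// Quaternions are plain objects {w, x, y, z}. Readings are renormalized
// defensively before use.

function normalize(q) {
  const n = Math.hypot(q.w, q.x, q.y, q.z);
  return { w: q.w / n, x: q.x / n, y: q.y / n, z: q.z / n };
}

// For a unit quaternion, the inverse is the conjugate.
function conjugate(q) {
  return { w: q.w, x: -q.x, y: -q.y, z: -q.z };
}

// Hamilton product a ⊗ b.
function multiply(a, b) {
  return {
    w: a.w * b.w - a.x * b.x - a.y * b.y - a.z * b.z,
    x: a.w * b.x + a.x * b.w + a.y * b.z - a.z * b.y,
    y: a.w * b.y - a.x * b.z + a.y * b.w + a.z * b.x,
    z: a.w * b.z + a.x * b.y - a.y * b.x + a.z * b.w,
  };
}

// Rotation of a seed since calibration, expressed in the seed's frame at
// calibration time: q_rel = conj(q_ref) ⊗ q_current.
function relativeRotation(qRef, qCurrent) {
  return multiply(conjugate(normalize(qRef)), normalize(qCurrent));
}

// Usage (hypothetical values):
const qRef = { w: 1, x: 0, y: 0, z: 0 };                      // captured in the calibration pose
const qNow = { w: Math.SQRT1_2, x: 0, y: 0, z: Math.SQRT1_2 }; // 90° about z
const qRel = relativeRotation(qRef, qNow); // equals qNow here, since qRef is identity
```

This version expresses the rotation in the seed's frame at calibration time. `multiply(normalize(qCurrent), conjugate(normalize(qRef)))` would give the same rotation expressed in the world frame instead.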

You will need a Raspberry Pi to aggregate the data and push it to the machine running the modeling script.

You can purchase this from the Raspberry Pi website. There are a few links to various purchasing options; you can pick the option that is right for you.
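Aggregation on the Raspberry Pi can be sketched as a buffer that keeps the latest sample from each seed in a pod and emits a snapshot frame for the modeling machine. The sample shape `{ seedId, t, quat }`, the 100 ms staleness window, and the `PodAggregator` class are hypothetical choices for illustration, not the project's documented design.

```javascript
// Hypothetical pod aggregator: keeps the most recent sample per seed and
// builds a snapshot ("frame") of the whole pod on demand.
class PodAggregator {
  constructor(expectedSeedIds, maxAgeMs = 100) {
    this.expected = new Set(expectedSeedIds);
    this.maxAgeMs = maxAgeMs;
    this.latest = new Map(); // seedId -> most recent sample
  }

  // Record a sample. Returns false for unknown seeds and for samples that
  // are older than (or as old as) the one already stored.
  ingest(sample) {
    if (!this.expected.has(sample.seedId)) return false;
    const prev = this.latest.get(sample.seedId);
    if (prev && prev.t >= sample.t) return false;
    this.latest.set(sample.seedId, sample);
    return true;
  }

  // Frame at time `now`: one quaternion per seed, or null when that seed
  // has not reported within maxAgeMs.
  frame(now) {
    const seeds = {};
    for (const id of this.expected) {
      const s = this.latest.get(id);
      seeds[id] = s && now - s.t <= this.maxAgeMs ? s.quat : null;
    }
    return { t: now, seeds };
  }
}

// Usage (timestamps in milliseconds):
const agg = new PodAggregator(["watch-01", "watch-02"], 100);
agg.ingest({ seedId: "watch-01", t: 1000, quat: { w: 1, x: 0, y: 0, z: 0 } });
agg.ingest({ seedId: "watch-02", t: 900, quat: { w: 1, x: 0, y: 0, z: 0 } });
const f = agg.frame(1050);
// f.seeds["watch-01"] is the identity quaternion; f.seeds["watch-02"] is null (150 ms old)
```

Marking stale seeds as `null` instead of silently reusing an old reading leaves the decision about how to fill gaps to the modeling script.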

You will need a basic router so the seeds can push data to the Raspberry Pi for aggregation. Most routers will work.
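How the seeds send data across the router isn't specified here. Assuming each seed sends one small JSON datagram per reading over UDP (an assumption, as are the field names and the example port), the receiving side on the Pi could be sketched with Node's built-in `dgram` module:

```javascript
const dgram = require("dgram");

// Hypothetical wire format: one JSON object per UDP datagram, e.g.
// {"seedId":"watch-01","t":1234,"quat":[w,x,y,z]}.
// Returns a normalized sample object, or null for anything malformed.
function parsePacket(buf) {
  let msg;
  try {
    msg = JSON.parse(buf.toString("utf8"));
  } catch {
    return null; // not valid JSON
  }
  if (msg === null || typeof msg !== "object") return null;
  const q = msg.quat;
  if (typeof msg.seedId !== "string" || typeof msg.t !== "number" ||
      !Array.isArray(q) || q.length !== 4 || !q.every(Number.isFinite)) {
    return null;
  }
  const [w, x, y, z] = q;
  return { seedId: msg.seedId, t: msg.t, quat: { w, x, y, z } };
}

// Listen for seed datagrams and pass each valid sample to onSample.
// Call close() on the returned socket to stop listening.
function startReceiver(port, onSample) {
  const socket = dgram.createSocket("udp4");
  socket.on("message", (buf) => {
    const sample = parsePacket(buf);
    if (sample) onSample(sample);
  });
  socket.bind(port);
  return socket;
}

// Usage on the Pi (port 5005 is an arbitrary example):
// startReceiver(5005, (s) => console.log(s.seedId, s.quat));
```

UDP fits this kind of stream because a lost reading is soon superseded by the next one; a TCP or WebSocket transport could reuse the same `parsePacket` validation.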

You can find the soldering guide on the Quick Start page.

To use this method, you need access to:

If you're looking for a specific README, we keep them updated in the Git repos. The repos you're going to use are: